Motivation

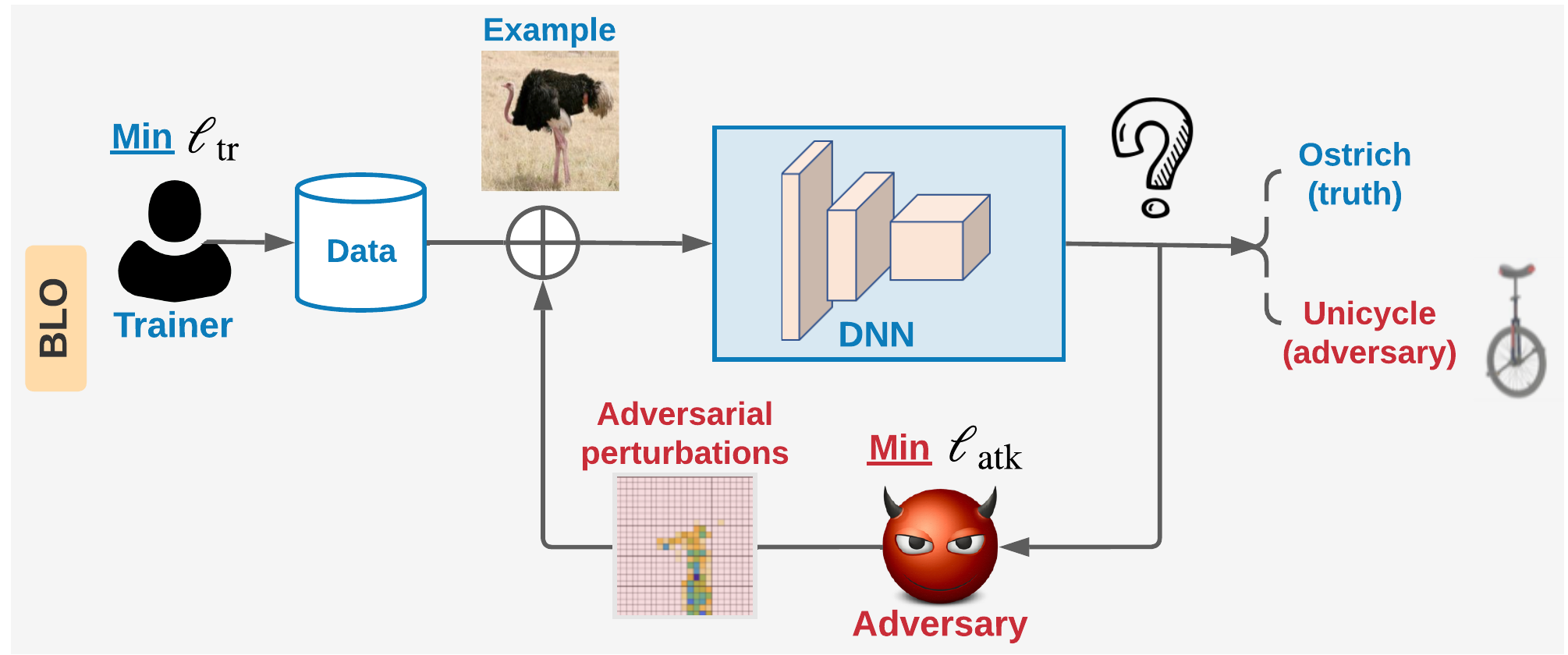

Adversarial training (AT) is a widely recognized defense mechanism for improving the robustness of deep neural networks against adversarial attacks. However, the conventional min-max optimization (MMO) formulation makes AT hard to scale. Thus, Fast-AT and other recent algorithms attempt to simplify MMO by replacing its maximization step with a single gradient sign-based attack generation step. Although easy to implement, Fast-AT lacks theoretical guarantees for its optimization algorithm, and its empirical performance is unsatisfactory due to robust catastrophic overfitting when training with strong adversaries. Given these limitations, we ask:

Fast Robust Training: Not Enough!

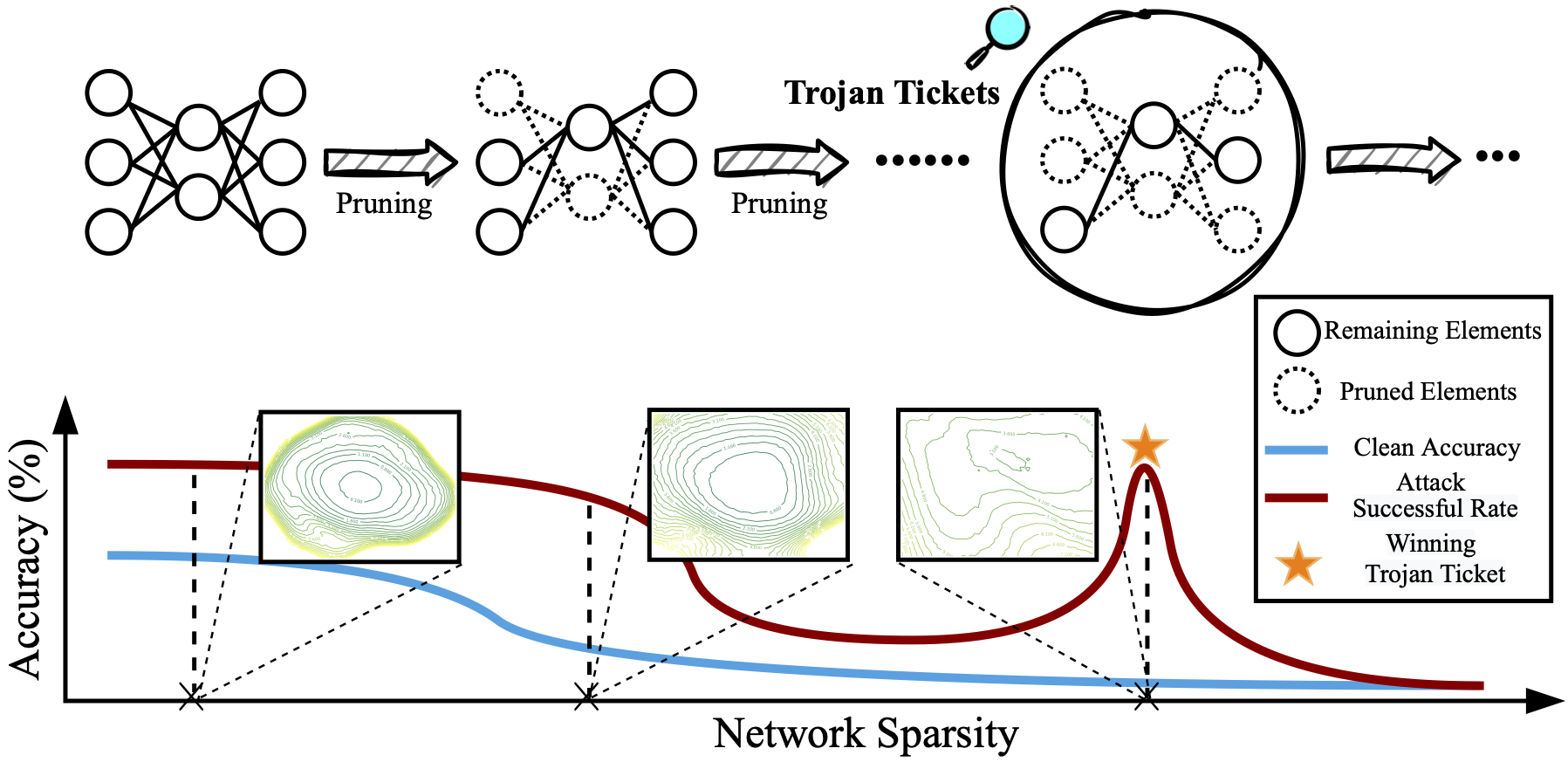

Trojan features learned through backdoor attacks are significantly more stable against pruning than benign features. Therefore, Trojan attacks can be uncovered through the pruning dynamics of the Trojan model.

Leveraging iterative magnitude pruning (IMP) in the style of the Lottery Ticket Hypothesis (LTH) [1], the ‘winning Trojan Ticket’ can be discovered: a sparse subnetwork that preserves the Trojan attack performance while retaining only chance-level performance on clean inputs.
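The pruning loop described above can be sketched as follows. This is a minimal, pure-Python illustration rather than the authors' implementation: the flat weight list, the `train_fn` callback, the rewind-to-initialization schedule, and the 20% per-round pruning rate are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

def magnitude_prune(weights: List[float], mask: List[int], rate: float) -> List[int]:
    """Remove the `rate` fraction of surviving weights with the smallest magnitude."""
    alive = [i for i, m in enumerate(mask) if m == 1]
    n_prune = int(len(alive) * rate)
    # Sort surviving indices by |w| and drop the smallest ones.
    to_prune = sorted(alive, key=lambda i: abs(weights[i]))[:n_prune]
    new_mask = list(mask)
    for i in to_prune:
        new_mask[i] = 0
    return new_mask

def iterative_magnitude_pruning(
    init_weights: List[float],
    train_fn: Callable[[List[float], List[int]], List[float]],  # hypothetical trainer
    rounds: int,
    rate: float = 0.2,  # assumed per-round pruning rate
) -> List[Tuple[List[int], List[float]]]:
    """Return one (mask, masked weights) ticket per pruning round."""
    mask = [1] * len(init_weights)
    tickets = []
    for _ in range(rounds):
        # Assumption: rewind to the initial weights, apply the mask, then train.
        start = [w * m for w, m in zip(init_weights, mask)]
        weights = train_fn(start, mask)
        mask = magnitude_prune(weights, mask, rate)
        tickets.append((list(mask), [w * m for w, m in zip(weights, mask)]))
    return tickets
```

Each returned ticket corresponds to one sparsity level, so the sequence of tickets traces the pruning dynamics of the model. In practice `train_fn` would run real training on the (possibly poisoned) dataset while keeping pruned weights at zero.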

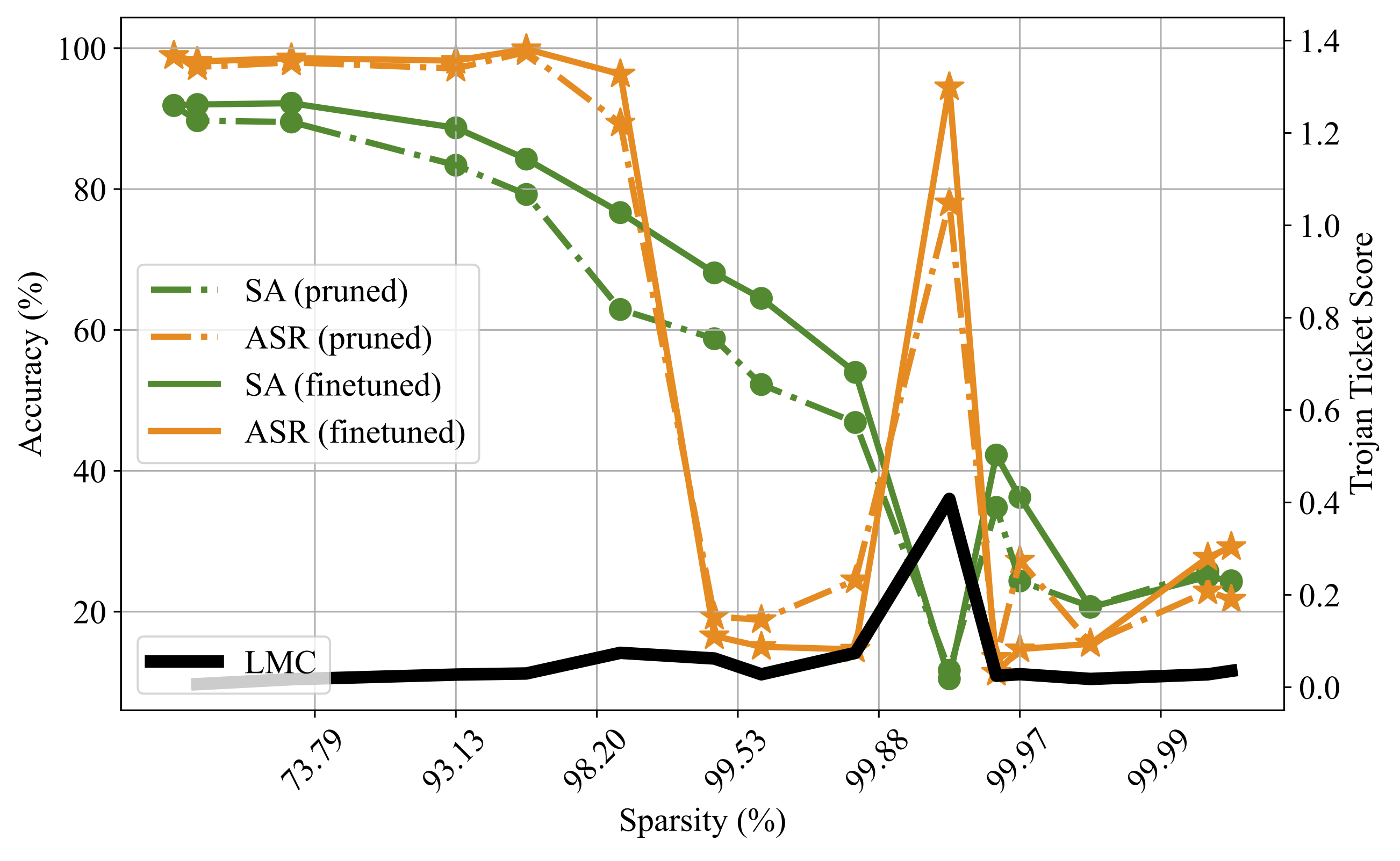

Thus, the existence of the ‘winning Trojan Ticket’ could serve as an indicator of Trojan attacks. However, in real-world applications, it is hard for users to obtain the attack success rate (ASR, the red curve in Figure 1), because the attack information is not available to them. We therefore need a substitute indicator for ASR that requires neither attack information nor clean data.

The winning Trojan ticket can be detected by our proposed linear mode connectivity (LMC)-based Trojan Score.

Trojan Score: Linear Mode Connectivity-based Trojan Indicator

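We adopt Linear Mode Connectivity (LMC) [2] to measure the stability of the Trojan ticket $\phi := m \odot \theta$ vs. the $k$-step finetuned Trojan ticket $\phi_k := m \odot \theta^{(k)}$.

We define the Trojan Score as

$$\mathcal{S}_{\text{Trojan}} = \sup_{\alpha \in [0,1]} \mathcal{E}\big(\alpha \phi + (1-\alpha)\phi_k\big) - \frac{\mathcal{E}(\phi) + \mathcal{E}(\phi_k)}{2},$$

where the first term denotes LMC and the second term an error baseline. $\mathcal{E}(\phi)$ denotes the training error of the model $\phi$.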
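As a rough sketch of how such a score could be computed, the snippet below evaluates the error along the straight line between a ticket and its finetuned copy and subtracts the average endpoint error. It assumes the score takes the standard error-barrier form used in [2]; the names `phi`, `phi_k`, `error_fn`, and the grid of `num_points` interpolation coefficients are illustrative choices, not taken from the original code.

```python
from typing import Callable, List

def interpolate(phi: List[float], phi_k: List[float], alpha: float) -> List[float]:
    """Point on the line segment between two weight vectors."""
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(phi, phi_k)]

def trojan_score(
    phi: List[float],                          # ticket weights (assumed flat)
    phi_k: List[float],                        # finetuned ticket weights
    error_fn: Callable[[List[float]], float],  # hypothetical training-error evaluator
    num_points: int = 11,                      # assumed grid resolution over alpha
) -> float:
    """Peak error along the linear path minus the mean endpoint error."""
    alphas = [i / (num_points - 1) for i in range(num_points)]
    # First term: highest error on the interpolation path (the LMC term).
    peak = max(error_fn(interpolate(phi, phi_k, a)) for a in alphas)
    # Second term: error baseline, the average error of the two endpoints.
    baseline = 0.5 * (error_fn(phi) + error_fn(phi_k))
    return peak - baseline
```

The supremum over the interpolation coefficient is approximated here by a maximum over a finite grid, so a finer grid gives a tighter estimate at the cost of more error evaluations.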

The sparse network with the peak Trojan Score maintains the highest ASR in the extreme pruning regime and is termed the Winning Trojan Ticket.
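Selecting the winning ticket then reduces to taking the sparsity level whose Trojan Score is largest. The helper below is a small sketch of that step; the dictionary layout and the numbers in the usage example are made-up placeholders, not results from the paper.

```python
from typing import Dict

def winning_trojan_ticket(scores: Dict[float, float]) -> float:
    """Return the sparsity level whose Trojan Score is the largest."""
    return max(scores, key=scores.get)

# Placeholder values for illustration only: sparsity level -> Trojan Score.
toy_scores = {0.20: 0.01, 0.67: 0.05, 0.95: 0.31, 0.99: 0.12}
best_sparsity = winning_trojan_ticket(toy_scores)
```

With the placeholder scores above, `best_sparsity` is `0.95`, the level at which the score peaks.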

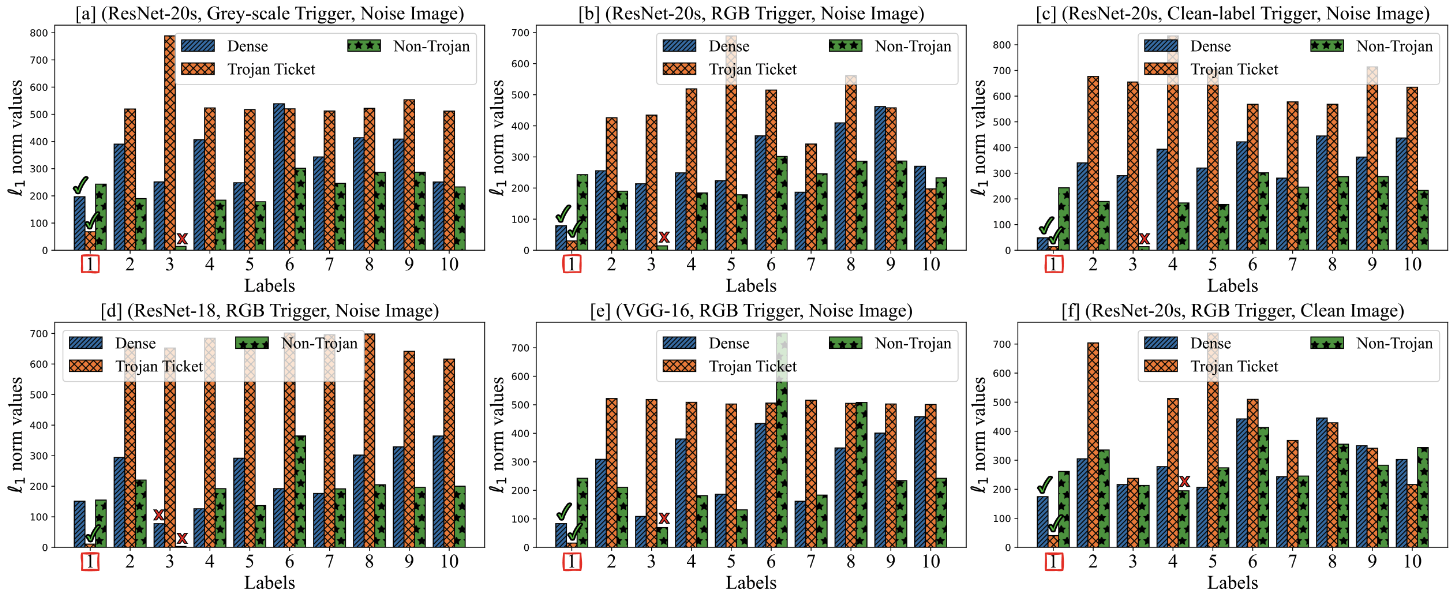

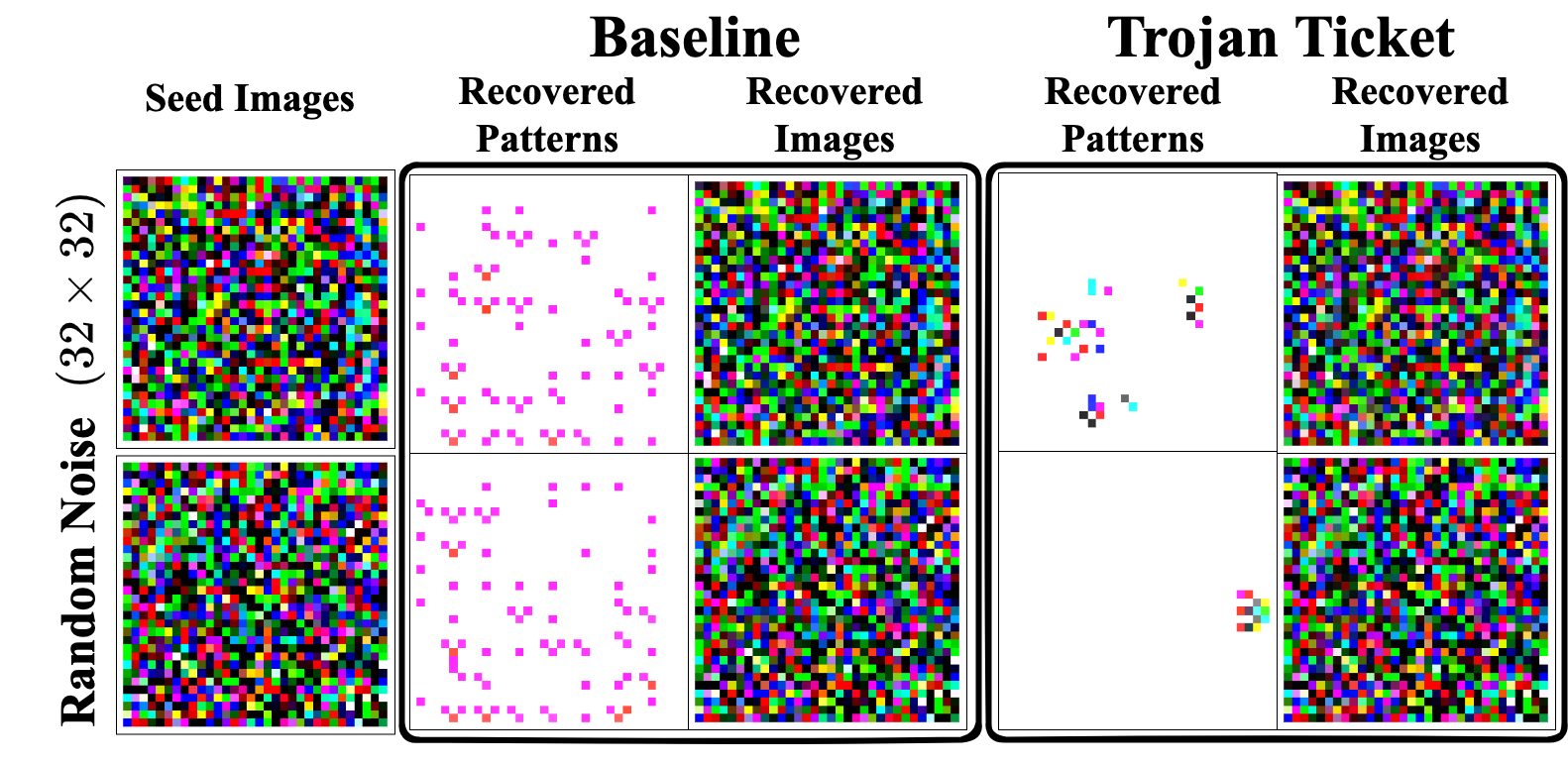

Backdoor Trigger Reverse Engineering

Trigger Reverse Engineering

Citation

@inproceedings{chen2022quarantine,
  title = {Quarantine: Sparsity Can Uncover the Trojan Attack Trigger for Free},
  author = {Chen, Tianlong and Zhang, Zhenyu and Zhang, Yihua and Chang, Shiyu and Liu, Sijia and Wang, Zhangyang},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages = {598--609},
  year = {2022}
}

References

[1] Jonathan Frankle et al. “The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks.” ICLR 2019.

[2] Jonathan Frankle et al. “Linear Mode Connectivity and the Lottery Ticket Hypothesis.” ICML 2020.

[3] Ren Wang et al. “Practical Detection of Trojan Neural Networks: Data-Limited and Data-Free Cases.” ECCV 2020.